Hangfire 1.8.0 is finally here. This release brings a set of great new features:

- first-class queue support for background jobs;
- an enhanced “Deleted” state that now supports exceptions;
- more continuation options that even allow implementing try/catch/finally semantics;
- better defaults that simplify the initial configuration;
- various Dashboard UI improvements, such as a full-width layout and optional dark mode support.

The complete list of changes made in this release is available on GitHub.

Contents

- Breaking Changes

- First-class Queue Support

- Dashboard UI Improvements

- Enhanced “Deleted” State

- Storage API Improvements

- SQL Server Storage

- Culture & Compatibility Level

- Deprecations in Recurring Jobs

Breaking Changes

Upgrade guide is available

Please check the Upgrading to Hangfire 1.8 documentation article for details.

| Package | Changes |
|---|---|
| Hangfire.Core | |
| Hangfire.SqlServer | |
| Hangfire.AspNetCore | |

Encryption is enabled by default in Microsoft.Data.SqlClient

The Microsoft.Data.SqlClient package introduces breaking changes, and encryption is enabled by default. If you get connection-related errors, you might need to add the TrustServerCertificate=true option to your connection string. Alternatively, you can stay with the System.Data.SqlClient package. More details can be found in this issue on GitHub.
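Here is a sketch of how that might look. It assumes the Hangfire.Core, Hangfire.SqlServer and Microsoft.Data.SqlClient packages are referenced. The option is appended to the connection string passed to UseSqlServerStorage, and the server and database names below are placeholders.

TrustServerCertificate=True turns off validation of the server certificate. A certificate that the client actually trusts is the better long-term fix.

```csharp
using Hangfire;

// Placeholder server and database names; adjust them to your environment.
// TrustServerCertificate=True makes Microsoft.Data.SqlClient accept the server
// certificate without validating it, while encryption itself stays enabled.
GlobalConfiguration.Configuration.UseSqlServerStorage(
    "Server=.;Database=Hangfire;Integrated Security=True;TrustServerCertificate=True");
```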

First-class Queue Support

Since the first versions of Hangfire, the “Queue” property has been related only to a specific instance of the “Enqueued” state, not to the background job itself. This often led to confusion in scenarios with dynamic queueing. That was true even though solutions like the static or dynamic QueueAttribute, and other extension filters, helped persist a target queue.

Background Jobs

You can now explicitly assign a queue to a background job when creating it, using the new method overloads in both the BackgroundJob class and the IBackgroundJobClient interface. The given queue will then be used every time the background job is enqueued, unless it's overridden by state filters like the QueueAttribute.

```csharp
var id = BackgroundJob.Enqueue<IOrdersServices>("critical", x => x.ProcessOrder(orderId));
BackgroundJob.ContinueJobWith<IEmailServices>(id, "email", x => x.SendNotification(orderId));
```

These changes can be beneficial for a microservice-based approach or when manual load balancing is required.

It’s also worth noting a change for delayed background jobs that have an explicit queue. They can now be placed directly into a job queue and processed by a worker without a “Scheduled → Enqueued” state transition, which gives them much better throughput.

Recurring Jobs

You can also specify an explicit queue name for recurring jobs, as shown in the snippet below. However, please note that recurring jobs are not filtered by this queue. The queue name is used only when a background job is created on the recurring job’s schedule. So, unfortunately, it’s still impossible to use the same storage for recurring jobs from different code bases, but this release also contains changes that will eventually make it possible.

```csharp
RecurringJob.AddOrUpdate("my-id", "critical", () => Console.WriteLine("Hello, world"), "* * * * *");
```

Storage Support Required

This feature requires job storage to persist a new field, which a storage may not support out of the box. If your storage doesn’t support it, a NotSupportedException will be thrown, so you will likely need to upgrade your job storage package. Currently, the following storages support this feature:

- Hangfire.SqlServer 1.8.0

- Hangfire.InMemory 0.4.0

- Hangfire.Pro.Redis 3.0.0

- Hangfire.Mongo 1.9.4

Dashboard UI Improvements

Full-width Support

The Dashboard UI is now fully responsive and uses the full width of the screen to display more information. Lengthy background or recurring job names, long argument lists, and tables with many columns no longer cause layout problems. The new layout is enabled by default.

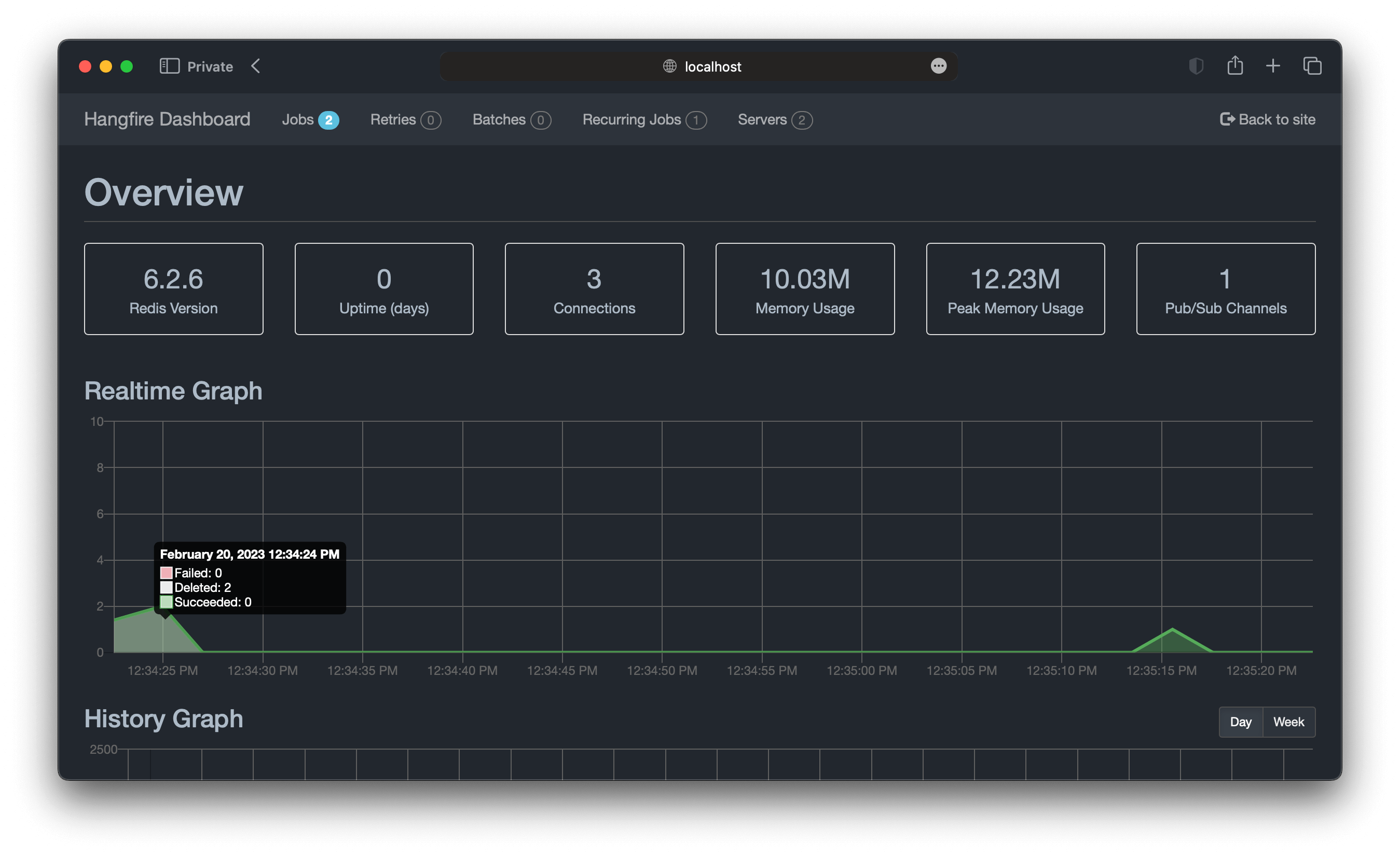

Dark Mode Support

This release brings dark mode support to the Dashboard UI. It is enabled by default and follows the system settings, switching automatically when they change.

Custom CSS and JavaScript Resources

Dashboard UI extensions can now add custom CSS and JavaScript files, which helps avoid possible Content Security Policy issues. These files are added as embedded resources to an extension assembly, and the GetManifestResourceNames method can be used to determine their resource names.

```csharp
using System.Reflection; // for the GetTypeInfo extension method

var assembly = typeof(MyCustomType).GetTypeInfo().Assembly;

// Call the `assembly.GetManifestResourceNames` method to learn more about paths.
configuration
    .UseDashboardStylesheet(assembly, "MyNamespace.Content.css.styles.css")
    .UseDashboardJavaScript(assembly, "MyNamespace.Content.js.scripts.js");
```

Custom Renderers on the Job Details Page

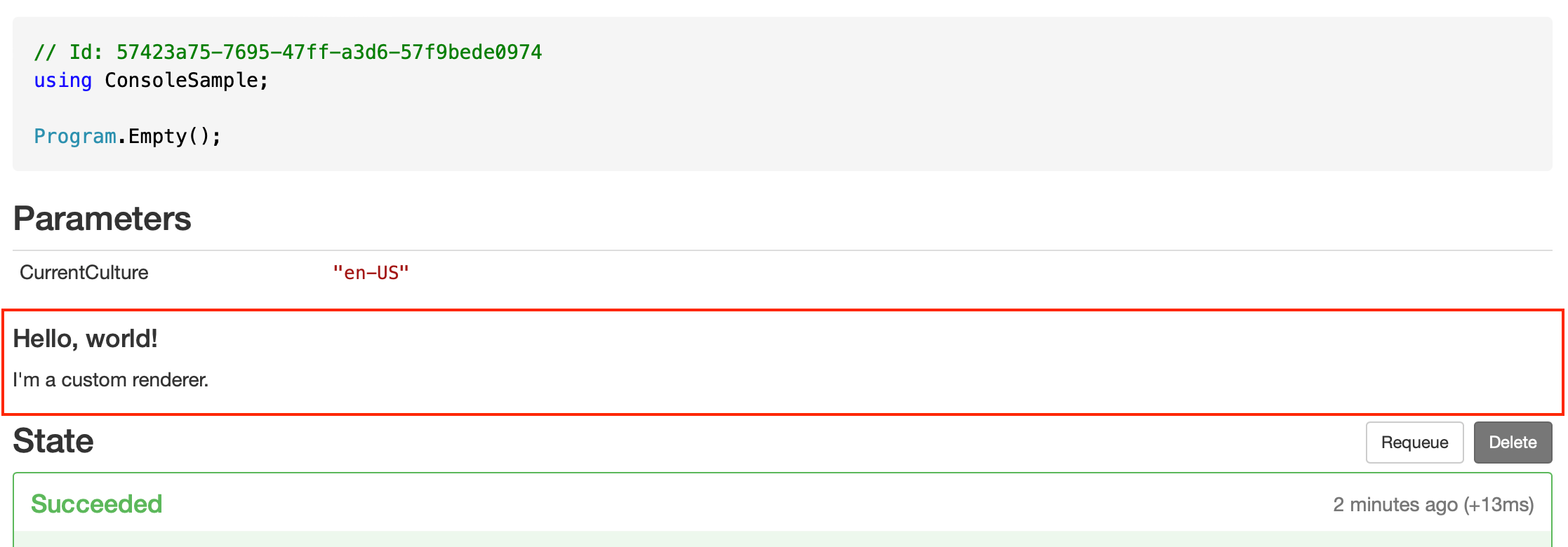

The “Job Details” page is now extensible. You can add custom sections by calling the UseJobDetailsRenderer method. It takes an integer ordering parameter and a callback that receives a JobDetailsRendererDto, which contains all the necessary details about the page itself and the job being displayed.

```csharp
using Hangfire.Dashboard; // NonEscapedString

configuration
    .UseJobDetailsRenderer(10, dto => new NonEscapedString("<h4>Hello, world!</h4><p>I'm a custom renderer.</p>"));
```

After calling the method above, a new section appears on the “Job Details” page, below the “Parameters” section and above the “States” section. Please find an example below.

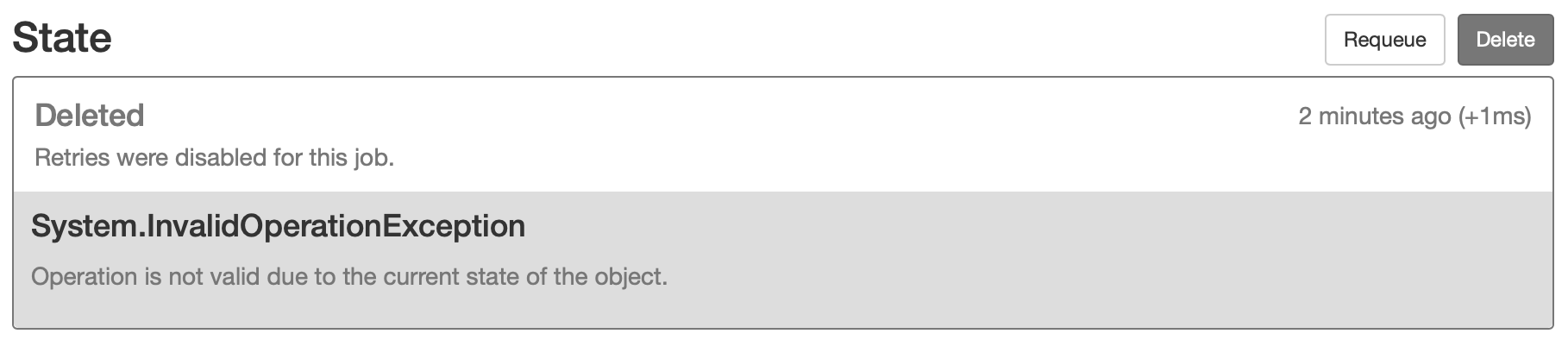

Enhanced “Deleted” State

You can now pass exception information to the “Deleted” state, so it implements “fault” semantics as a final state. Background jobs in the “Deleted” state expire automatically. This differs from jobs in the “Failed” state, which is not considered final.

The AutomaticRetry filter automatically passes the exception to the “Deleted” state when all retry attempts are exhausted and the filter is configured to delete the job. You can also pass an exception manually when creating an instance of the DeletedState class. The stack trace isn’t persisted, to avoid data duplication, since it’s already preserved in the “Failed” state. Only the type information, the message, and inner exceptions (if any) are persisted.

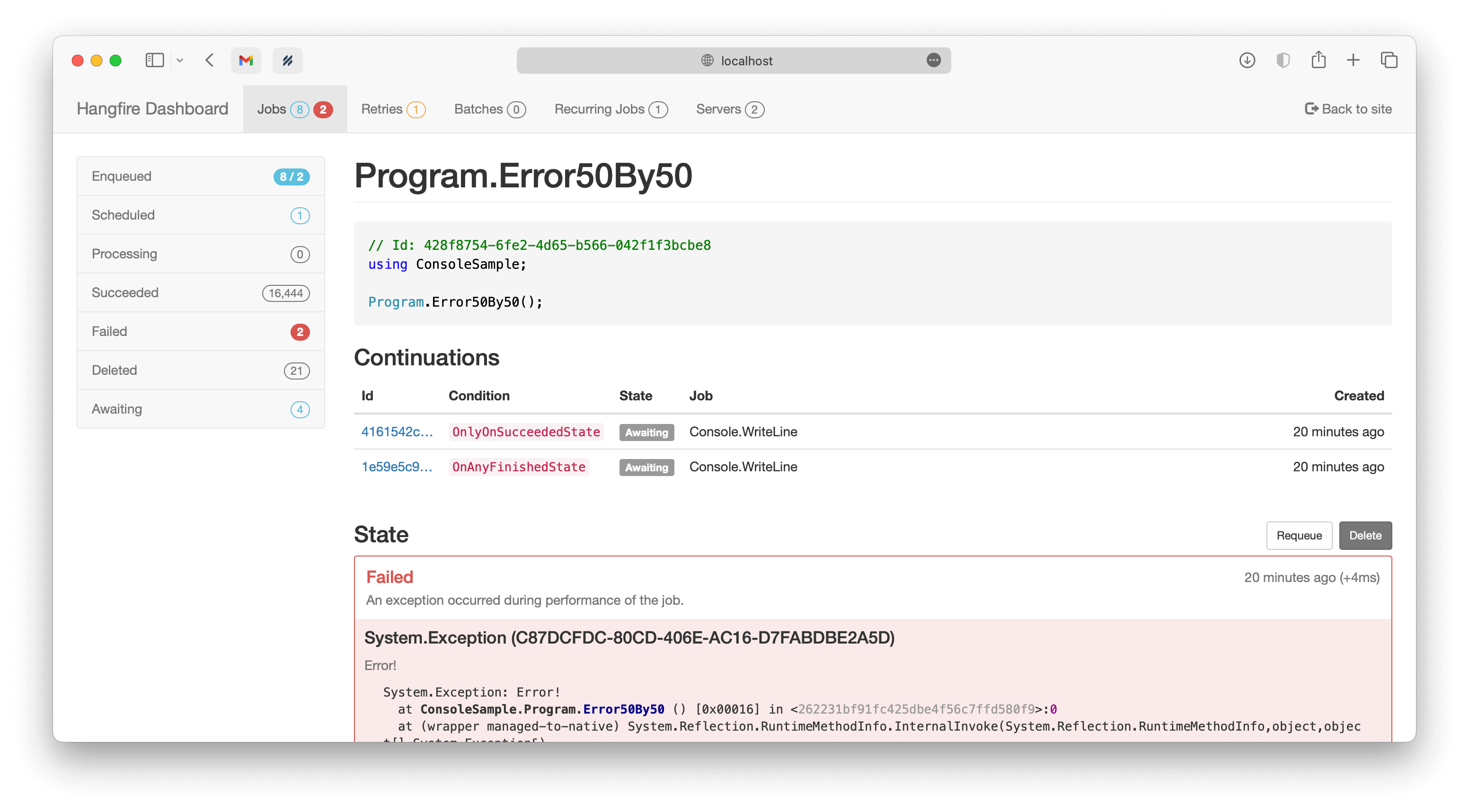

The JobContinuationOptions enumeration was also extended. You can now create continuations explicitly for the “Deleted” state with the OnlyOnDeletedState option. JobContinuationOptions now implements flags semantics, so combinations of values could be supported in the future.

Try/Catch/Finally Implementation

We now have everything needed to build try/catch/finally background jobs, and even to pass the results or exceptions of antecedent background jobs to their continuations as arguments. To enable this feature, call the UseResultsInContinuations method and apply the FromResult or FromException attributes to the corresponding parameters.

```csharp
configuration
    .UseResultsInContinuations();
```

As an example, consider the following methods:

- The ExceptionInfo class (from the Hangfire namespace) holds minimal exception information.
- bool is the result type of the Try job.
- bool is also the type of the matching parameter in the success continuation.

```csharp
public static bool Try() { /* ... */ }

public static void Catch([FromException] ExceptionInfo exception) { /* ... */ }

public static void Finally() { /* ... */ }

public static void Continuation([FromResult] bool result) { /* ... */ }
```

With all the methods in place, let’s create background jobs for them. Please note that these jobs are created non-atomically, since they are not part of a batch. We pass the default keyword as the argument for continuations, and the actual values are used at run time.

```csharp
var id = BackgroundJob.Enqueue(() => Try());

// "Catch" background job
BackgroundJob.ContinueJobWith(id, () => Catch(default),
    JobContinuationOptions.OnlyOnDeletedState);

// "Finally" background job
BackgroundJob.ContinueJobWith(id, () => Finally(),
    JobContinuationOptions.OnAnyFinishedState);

// Continuation on success
BackgroundJob.ContinueJobWith(id, () => Continuation(default),
    JobContinuationOptions.OnlyOnSucceededState);
```

The Batches feature from Hangfire Pro allows creating the whole block atomically. Either all of the background jobs are created, or, on failure, none of them are.

```csharp
BatchJob.StartNew(batch =>
{
    var id = batch.Enqueue(() => Try());
    batch.ContinueJobWith(id, () => Catch(default), JobContinuationOptions.OnlyOnDeletedState);
    batch.ContinueJobWith(id, () => Finally(), JobContinuationOptions.OnAnyFinishedState);
    batch.ContinueJobWith(id, () => Continuation(default), JobContinuationOptions.OnlyOnSucceededState);
});
```

Storage API Improvements

Single time authority for schedulers. Storage can now act as a time authority for the DelayedJobScheduler and RecurringJobScheduler background processes. When a storage implementation supports this feature, these components use the current UTC time of the storage instance instead of the server’s current UTC time. This makes scheduled processing less sensitive to time synchronization issues.

Fewer network roundtrips. Many network calls during processing are related to background job parameters. Since parameters are small and most of them rarely change, they are now cached in the new ParametersSnapshot property of the JobDetailsDto and BackgroundJob classes. The GetJobParameter method now supports an allowStale argument that retrieves the cached version instead, eliminating additional network calls.

More transactional methods. This version adds transaction-level distributed locks, allowing more features to be implemented in extension filters without sacrificing atomicity. Storages that generate identifiers on the client side can now also create a background job inside a transaction, which reduces the number of roundtrips to storage.

Feature-based flags smooth the transition. Every new feature is optional, so storage implementations don’t face breaking changes.

SQL Server Storage

Breaking Changes

The Microsoft.Data.SqlClient package is the “flagship data access driver for SQL Server going forward”. For that reason, the Hangfire.SqlServer package uses it by default when it’s referenced in the target project. Detection is performed automatically at run time.


In this version, the Hangfire.SqlServer package no longer references either Microsoft.Data.SqlClient or System.Data.SqlClient as a dependency. You therefore need to reference your preferred package manually, whether you stay with System.Data.SqlClient for now or move to the newer one. You can use the following snippet with * as the version to always get the latest one.

```xml
<ItemGroup>
  <PackageReference Include="Microsoft.Data.SqlClient" Version="*" />
  <!-- OR -->
  <PackageReference Include="System.Data.SqlClient" Version="*" />
</ItemGroup>
```

Better Defaults

We’ve introduced many changes in previous versions of the Hangfire.SqlServer storage to make it faster and more robust. However, they weren’t enabled by default, because we first wanted to make sure they worked reliably. Now that they have proven useful and stable, they are enabled by default to avoid complex configuration.

- Default isolation level is finally set to READ COMMITTED.

- Command batching for transactions is now enabled by default.

- Transactionless fetching based on sliding invisibility timeout is used by default.

- Queue poll interval is set to TimeSpan.Zero, which results in the default 200 ms interval.

- Schema-related options such as DisableGlobalLocks are detected automatically using the new TryAutoDetectSchemaDependentOptions option, which is enabled by default.
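For comparison, the sketch below shows roughly how these improvements had to be opted into explicitly before 1.8, next to the bare 1.8 call that relies on the new defaults.

It assumes the Hangfire.Core and Hangfire.SqlServer packages are referenced, and the connection string is a placeholder. The two calls are alternatives, so the second one is commented out. The exact default values in your version are worth checking against the SqlServerStorageOptions documentation.

```csharp
using System;
using Hangfire;
using Hangfire.SqlServer;

// Placeholder connection string; adjust it to your environment.
const string connectionString = "Server=.;Database=Hangfire;Integrated Security=True;TrustServerCertificate=True";

// Option A (1.7 style): each improvement is switched on by hand.
GlobalConfiguration.Configuration.UseSqlServerStorage(connectionString, new SqlServerStorageOptions
{
    CommandBatchMaxTimeout = TimeSpan.FromMinutes(5),     // command batching for transactions
    SlidingInvisibilityTimeout = TimeSpan.FromMinutes(5), // transactionless fetching
    QueuePollInterval = TimeSpan.Zero,                    // zero poll interval
    UseRecommendedIsolationLevel = true,                  // READ COMMITTED isolation level
    DisableGlobalLocks = true                             // schema-dependent option, set manually
});

// Option B (1.8): the defaults cover the list above, and schema-dependent options
// such as DisableGlobalLocks are detected automatically (TryAutoDetectSchemaDependentOptions).
// GlobalConfiguration.Configuration.UseSqlServerStorage(connectionString);
```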

Schema 8 and Schema 9 Migrations

Schema 8 and Schema 9 are optional but recommended migrations that contain the following changes. Please note that applying them automatically requires the EnableHeavyMigrations option to be enabled in SqlServerStorageOptions. This is because the migration can take some time when the Counter or JobQueue tables contain many records.

Schema 8

- Counter table now has a clustered primary key to allow replication on Azure;

- JobQueue.Id column type was changed to bigint to avoid overflows;

- Server.Id column length was changed to 200 to allow lengthy server names;

- Hash and Set tables now include the IGNORE_DUP_KEY option to make upsert queries faster.

Schema 9

- State table now has a non-clustered index on its CreatedAt column.

As always, you can apply the migration manually by downloading it from GitHub using this link.
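To let Hangfire apply the migration automatically at startup instead, enable EnableHeavyMigrations in SqlServerStorageOptions, as in the following sketch.

It assumes the Hangfire.Core and Hangfire.SqlServer packages are referenced and uses a placeholder connection string. The migration may take a while on large Counter or JobQueue tables, so running the first startup during a maintenance window may be wise.

```csharp
using Hangfire;
using Hangfire.SqlServer;

// Placeholder connection string; adjust it to your environment.
GlobalConfiguration.Configuration.UseSqlServerStorage(
    "Server=.;Database=Hangfire;Integrated Security=True;TrustServerCertificate=True",
    new SqlServerStorageOptions
    {
        PrepareSchemaIfNecessary = true, // install or upgrade the schema on startup (the default)
        EnableHeavyMigrations = true     // also allow the potentially long Schema 8/9 migrations
    });
```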

Culture & Compatibility Level

Hangfire automatically captures CultureInfo.CurrentCulture and CultureInfo.CurrentUICulture using the CaptureCultureAttribute filter. It preserves their two-letter codes as background job parameters, so a background job runs with the same culture information as the original caller context. The downside of these defaults is heavily duplicated data across background jobs.

Of course, applications with a single culture can remove that filter to avoid capturing anything and save some storage space. But now there are two finer-grained options:

- The new compatibility level optimizes the case when CurrentCulture equals CurrentUICulture.
- Setting a default culture avoids saving culture-related parameters at all when an application primarily uses the same culture.

Compatibility Level

Once all our servers are upgraded to version 1.8, we can set the following compatibility level. It stops writing the CurrentUICulture job parameter when it’s the same as the CurrentCulture one. Please note that version 1.7 and lower don’t know what to do in this case and will throw an exception, so all servers should be upgraded first.

```csharp
configuration
    .SetDataCompatibilityLevel(CompatibilityLevel.Version_180);
```

Default Culture

We can also go further and stop writing culture-related parameters when our application deals mainly with a single culture.

Two-step deployment required

When there are multiple servers, we should deploy the changes in two steps. Otherwise, servers that haven’t received the first deployment yet won’t know what to do when the culture parameters are missing.

- First, deploy with UseDefaultCulture(/* Culture */).

- Then, deploy with UseDefaultCulture(/* Culture */, captureDefault: false).

We can set the default culture by calling the UseDefaultCulture method. With a single argument, it uses the same culture for both CurrentCulture and CurrentUICulture, but there’s an overload to set each of them explicitly.

```csharp
configuration
    .UseDefaultCulture(CultureInfo.GetCultureInfo("en-US"));
```

After calling the line above, the CaptureCultureAttribute filter will use the configured default culture when the CurrentCulture or CurrentUICulture parameters are missing for a particular background job.

Once all servers know what to do when these parameters are missing and the changes are deployed, we can pass false for the captureDefault parameter to stop preserving the default culture.

```csharp
configuration
    .UseDefaultCulture(CultureInfo.GetCultureInfo("en-US"), captureDefault: false);
```

Deprecations in Recurring Jobs

Deprecations are mainly related to recurring background jobs and are made to avoid confusion when explicit queue names are used.

Implicit Identifiers Deprecated

Methods with implicit recurring job identifiers are now obsolete. These methods make it easier to create a recurring job, but they sometimes cause confusion. For example, when the same method is used to create multiple recurring jobs, only a single one is actually created. With queue support for background jobs, there could be even more difficulties. So the following calls:

```csharp
RecurringJob.AddOrUpdate(() => Console.WriteLine("Hi"), Cron.Daily);
```

Should be replaced with the following ones, where the first parameter determines the recurring job identifier:

```csharp
RecurringJob.AddOrUpdate("Console.WriteLine", () => Console.WriteLine("Hi"), Cron.Daily);
```

For non-generic methods, the identifier is {TypeName}.{MethodName}. For generic methods, it’s much better to open the Recurring Jobs page in the Dashboard UI and check the identifier of the corresponding recurring job to avoid any mistakes.
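To make the {TypeName}.{MethodName} rule concrete, here is a small self-contained sketch that derives such an identifier from a method-call expression.

The ImplicitRecurringJobId helper is hypothetical. It only approximates the rule for non-generic types and is not a Hangfire API. For generic methods, checking the Dashboard UI, as suggested above, remains the reliable option.

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical helper (not part of Hangfire): approximates the implicit
// recurring job identifier for non-generic types as {TypeName}.{MethodName}.
static string ImplicitRecurringJobId(Expression<Action> methodCall)
{
    // The body of a lambda like `() => Console.WriteLine("Hi")` is a MethodCallExpression.
    if (methodCall.Body is not MethodCallExpression call)
        throw new ArgumentException("Expected a method call expression.", nameof(methodCall));

    // Short type name (without namespace) followed by the method name.
    return $"{call.Method.DeclaringType!.Name}.{call.Method.Name}";
}

// Prints the identifier to pass explicitly when migrating away from the implicit overload.
Console.WriteLine(ImplicitRecurringJobId(() => Console.WriteLine("Hi")));
```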

Optional Parameters Deprecated

It is impossible to add new parameters to methods with optional parameters without introducing breaking changes. The new explicit queue support should be consistent with the other new methods in the BackgroundJob / IBackgroundJobClient types. For that reason, methods with optional parameters are now deprecated. So the following lines:

```csharp
RecurringJob.AddOrUpdate("my-id", () => Console.WriteLine("Hi"), Cron.Daily, timeZone: TimeZoneInfo.Local);
```

Should be replaced with an explicit RecurringJobOptions argument:

```csharp
RecurringJob.AddOrUpdate("my-id", () => Console.WriteLine("Hi"), Cron.Daily, new RecurringJobOptions
{
    TimeZone = TimeZoneInfo.Local
});
```

The RecurringJobOptions.QueueName property is deprecated

Use the new methods with an explicit queue name instead, once your storage supports them. This also makes re-queueing logic work as expected, placing jobs back into the same queue. So the following calls:

```csharp
RecurringJob.AddOrUpdate("my-id", () => Console.WriteLine("Hi"), Cron.Daily, queue: "critical");
```

Should be replaced with these ones:

```csharp
RecurringJob.AddOrUpdate("my-id", "critical", () => Console.WriteLine("Hi"), Cron.Daily);
```